Primary Tasks 🎯

First part: generate embeddings for the source data and store in vector database

Second part: Handling query made by user (once vector store for source data has been created or loaded)

Highly optimized content-aware chatbot to query PDF files using embeddings and chat completion APIs of Open AI

Pranav Kural

May 16, 2023

ChatGPT, Open AI, Machine Learning, Artificial Intelligence, Chat Completion, Embeddings, chatbot

This article will demonstrate a model for building highly optimized, cost-effective and content-aware chatbots for querying PDF or text files. We’re going to setup a basic API that will allow us to link a PDF file and ask natural language queries related to the information in the provided document.

For the sake of simplicity, we’re building this project specifically for PDF files, but the model and the architecture described can be used for any other types of documents (basically, anything that can be parsed as textual data).

Let’s dive in 🚀

What are we trying to achieve? And how will we achieve it?

I like to start with a vision for the end-goal and go backwards, breaking down what needs to be done.

Without adieu, here’s what the API should do when completed:

Using the create endpoint, user provides URL to the PDF file to initiate the creation of a vector store for the data in that file. This vector store will then be stored in Pinecone and will be queried when user makes queries.

/create?document_url={document_url}If an index already created, user can use the load endpoint to initiate the process of loading the existing vector store from Pinecone

/loadUser can also add more documents or source data by using the update endpoint

/update?document_url={document_url}To make a query and get response, we query endpoint:

/query?q={query text}First part: generate embeddings for the source data and store in vector database

Second part: Handling query made by user (once vector store for source data has been created or loaded)

If you’d like to have a look at the code or even deploy your own version of this project locally or remotely, here is the link to project’s Github repository which also lists instructions of usage.

Python is the choice of programming language used for the project since a lot of machine learning tools, libraries and frameworks natively support Python.

Tools we would be using primarily:

| Tool | Purpose |

|---|---|

| LlamaIndex | Create index for supplied source data (we later run the query on an index). Creation of such indices handle context storage, prompt limitations and text-splitting under the hood. We’ll see later why this is useful. |

| Open AI | Provides API for embeddings generation and chat completion |

| Pinecone | Hosted, cloud-native vector database with simple API |

| Fast API | Framework to quickly build simple yet robust RESTful API in Python |

| Uvicorn | An ASGI web server implementation for Python |

The most crucial thing for this API to work is the vector store storing embeddings for our source data (PDF file), and the below diagram displays the basic flow for handling the create and update endpoints.

Let’s break each major step down and study how it works?

GET or POST request on create or update endpoint and provide URL to the PDF documentcreate endpoint being used: delete existing Pinecone index (index name given in params.py), and re-create new indexupdate endpoint being used: update the existing Pinecone indexPlease note that this document is an excrept from the original document that was sourced from internet. Original source: SOP for Quality improvement - Quality Improvement Secretriat - MOHFW

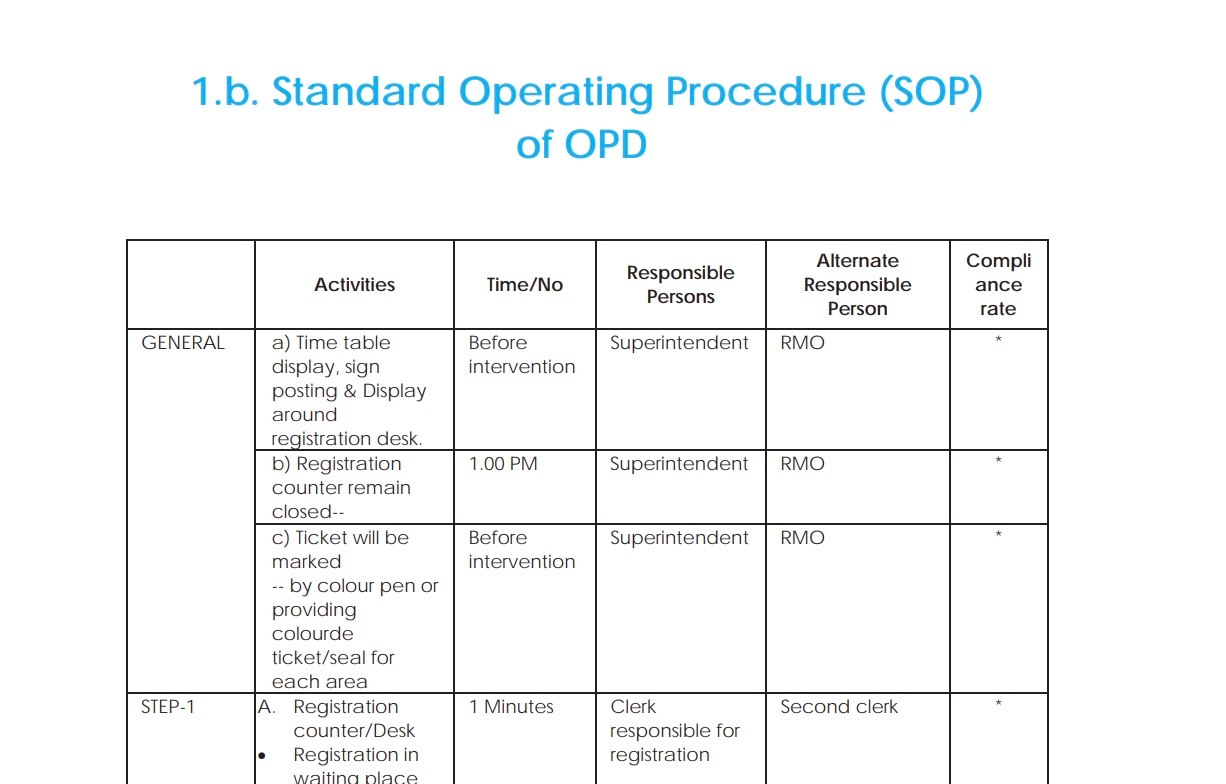

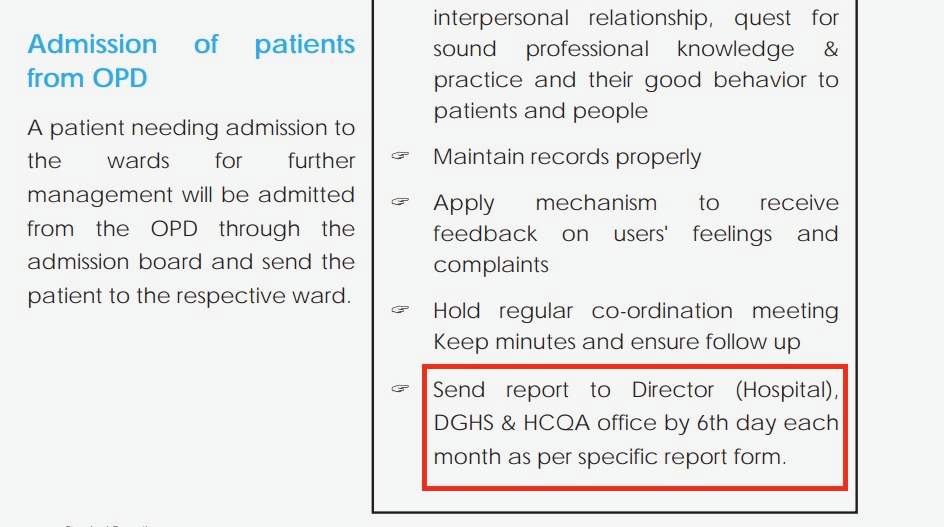

Sample of source data:

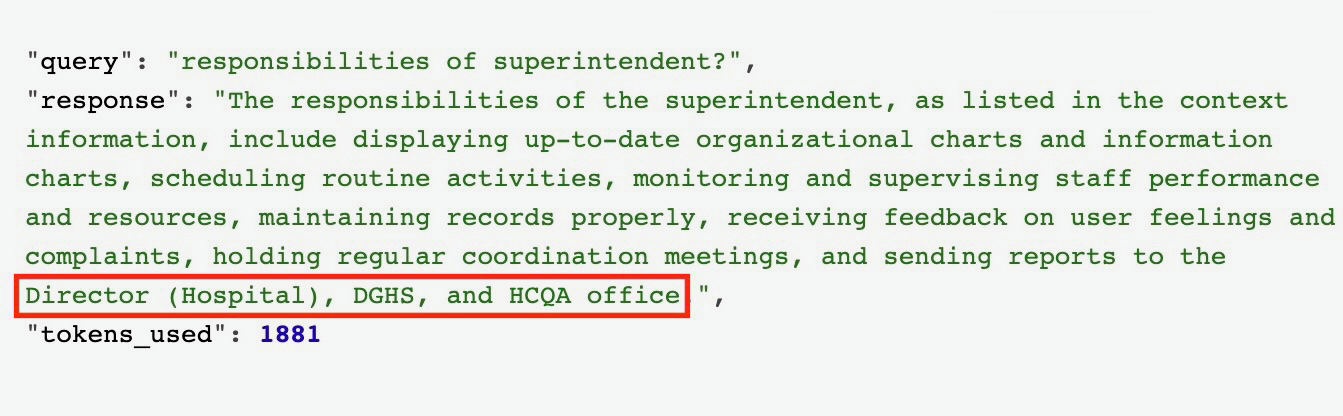

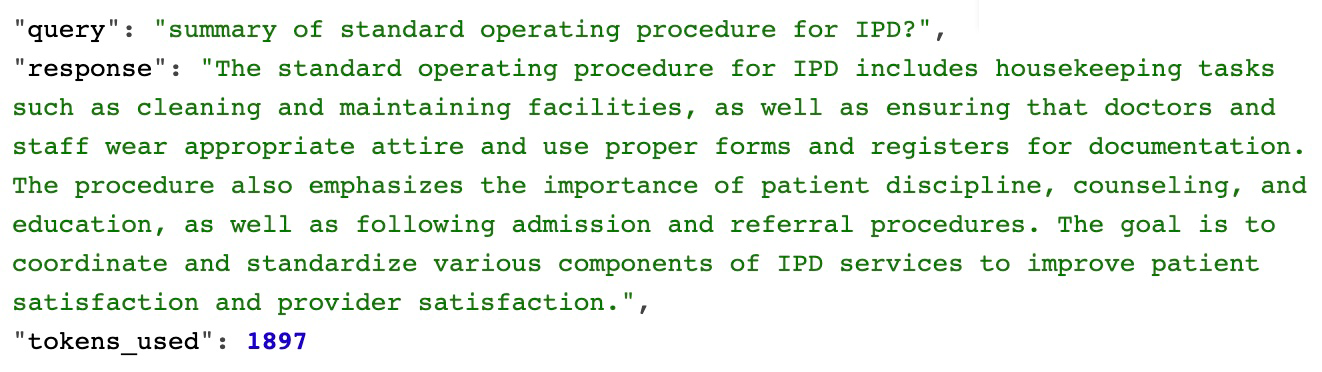

You’ll notice in the response to the first test query that the response has been generated entirely from the source data and in natural language.

portion of original source document

This credibly proves that our program was able to extract a relevant portion of data from all of our source data that related most to the query being asked, and fed it to the chat completion (ChatGPT) model to generate a natural language response 🎉

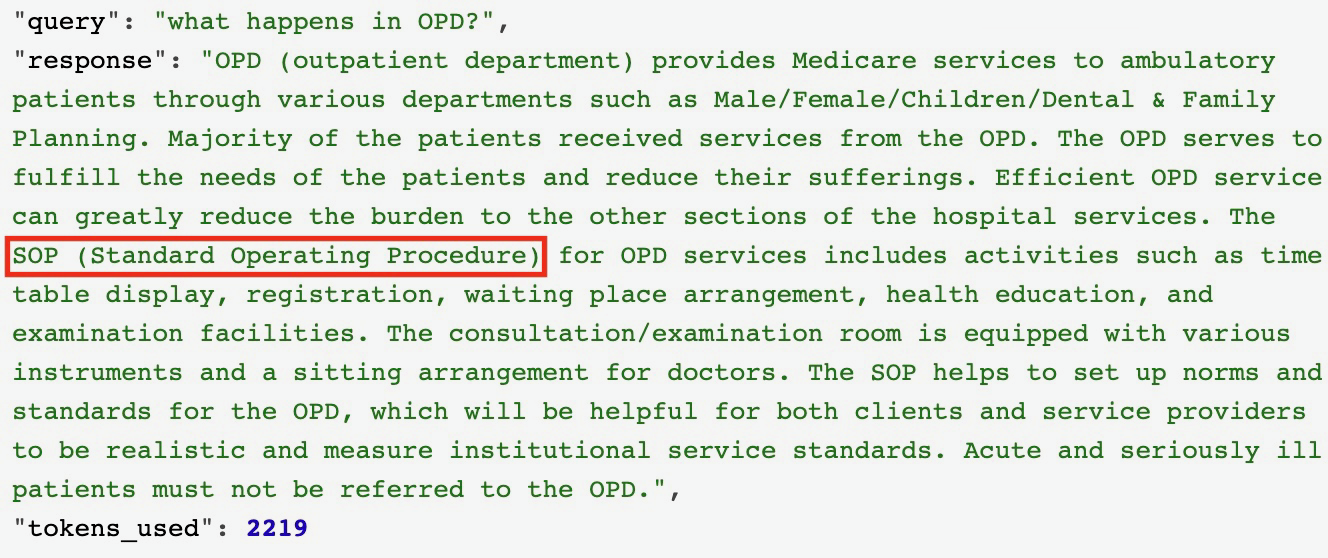

Let’s take it up a notch and attempt a query which would require processing of a substantial portion of the source document:

This project demonstrated a frictionless and efficient approach to building highly-optimized API for interacting with and extracting information from PDF documents. The resulting API application provides the experience alike a content-aware chatbot, specifically trained to return responses from provided source data using its cutting-edge natural language processing capabilities.

The illustrated PDF-GPT modal parses the source data, creates and manages indices using LlamaIndex, generates embeddings using Open AI, and stores the embeddings in the Pinecone vector database. We showcased the efficacy of the model by testing it with a sample PDF document and querying relevant information from the source data.

With its optimized architecture and integration with powerful tools like LlamaIndex, Langchain, Open AI, Pinecone, Fast API, and Uvicorn, PDF-GPT offers a reliable and user-friendly modal for building applications which can enable interaction with documents using natural language.

Though we only used PDF documents, the approach implemented by PDF-GPT can be used to build modals for processing any type of textual source, like other document types (word, markdown, etc.), webpages (textual data extracted using web scraping), or data from a database (textual data extraction using database connectors). Not going to let out too much details at the moment but I might already be in the process of building something like this.. more deets coming soon 😉

Until then, I hope you gained some insight from this project, and I wish you best of the luck for your endeavours! Looking forward to what you might build with this! 😄