What is Multimodality?

Modality: mode + ability, i.e., the ability to represent data in different modes (types such as text, image, audio, etc.).

Unimodal Machine Learning: ML models and frameworks that deal with a single modality. Example: the revolutionary ChatGPT (based on GPT-3.5) works with textual data only.

Multimodal Machine Learning: ML models and frameworks that work with multiple modalities or mediums of data representation like text, image, audio, video, etc.

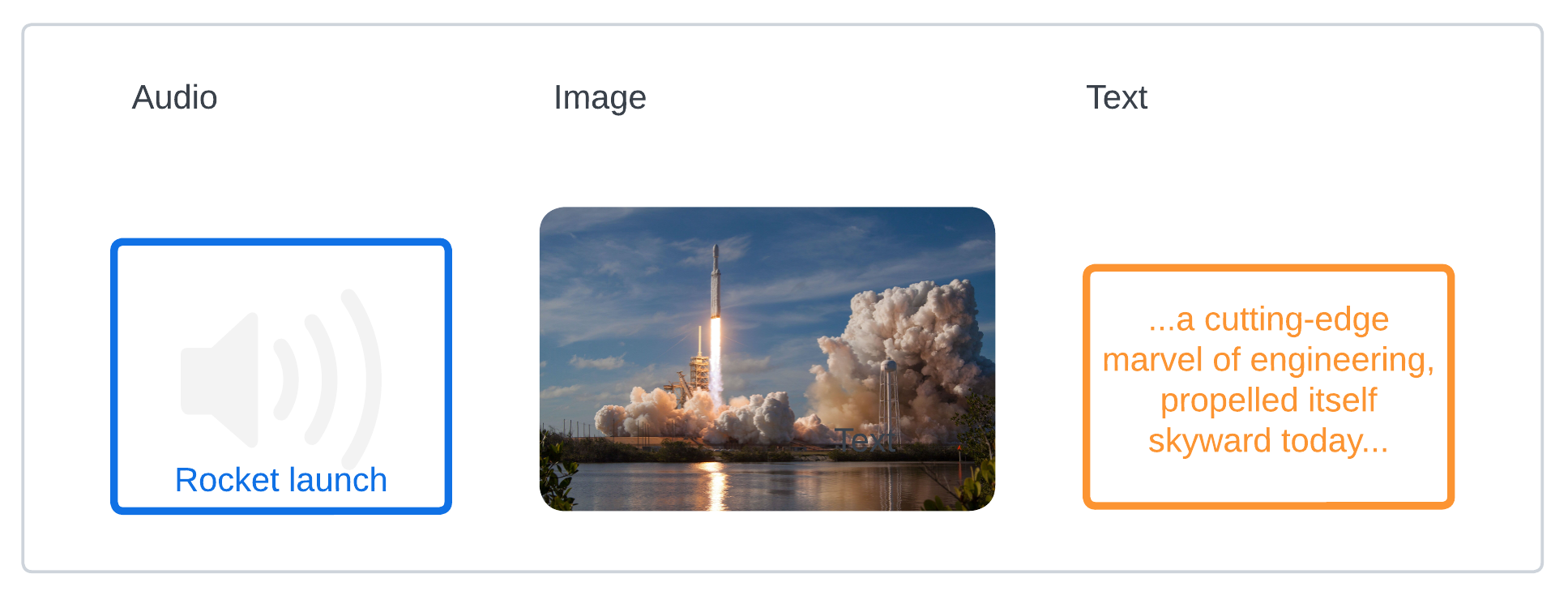

Such models are capable of connecting the dots between objects of different kinds, or even of helping generate contextually related content of different forms given an object of a certain supported kind. For example, Meta AI’s ImageBind model learns a shared embedding space for six modalities. Given a text describing the launch of a rocket into space, we can use it to find related content in the other five modalities, and, when it is combined with generative models, to produce an image of the launch, audio in the same context, possibly even a video displaying the motion, and more! 🤯

multiple mediums of data representation

How do we create multimodal ML models?

There are multiple approaches that enable the creation of efficient multimodal machine learning models and frameworks. Let’s start from the basics so we don’t leave anyone out.

One True Modality

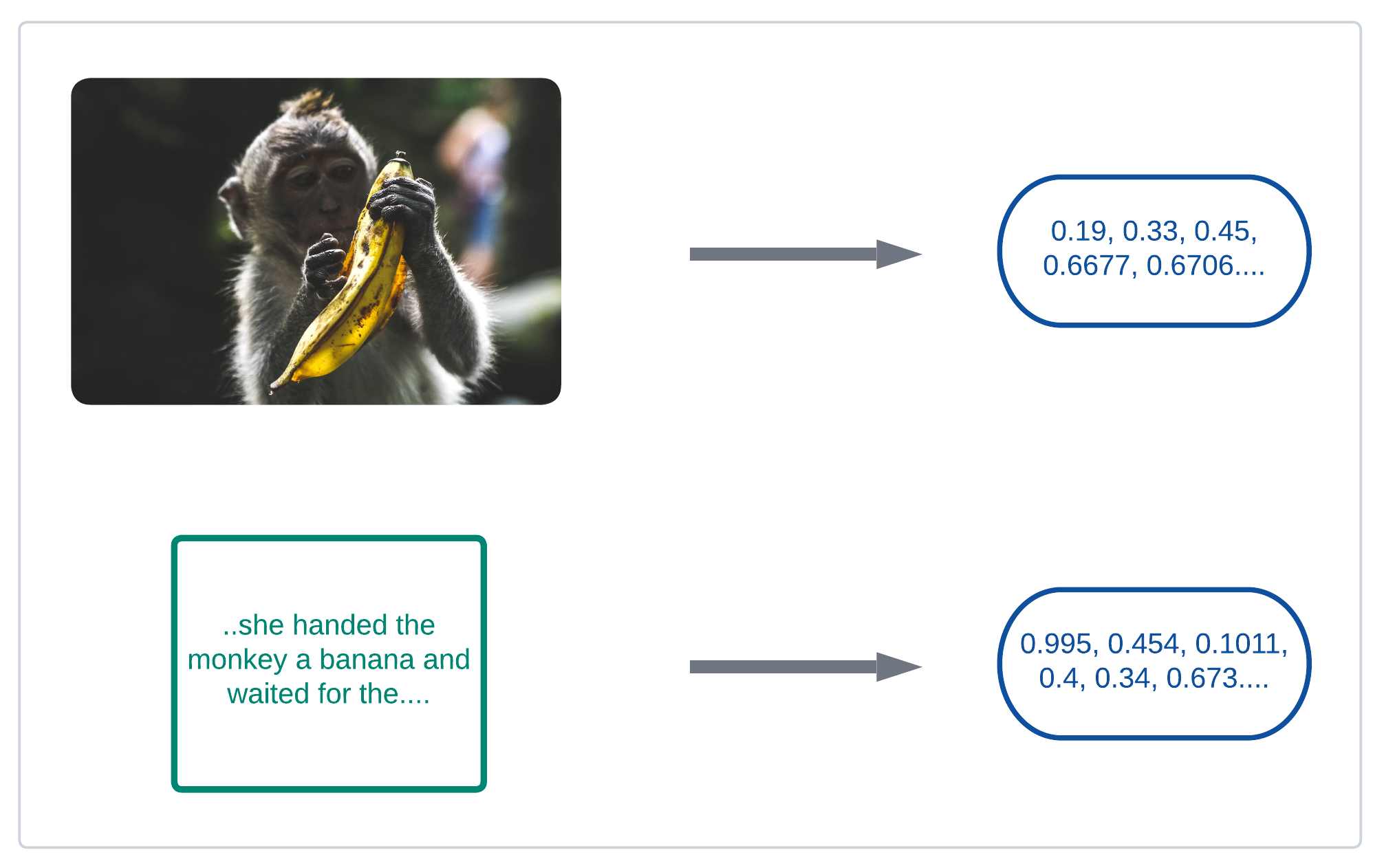

To compare data of different types and establish some notion of similarity between them, we need a common structure into which all these data types can be converted, so that we have a way of deducing similarity or dissimilarity.

Simple example: the accompanying image shows textual and image data being converted to a bunch of numbers.

conversion from different mediums of data representation to one single medium of data representation

There are several ways of representing this bunch of numbers, and one of the most common is to represent them as vectors.

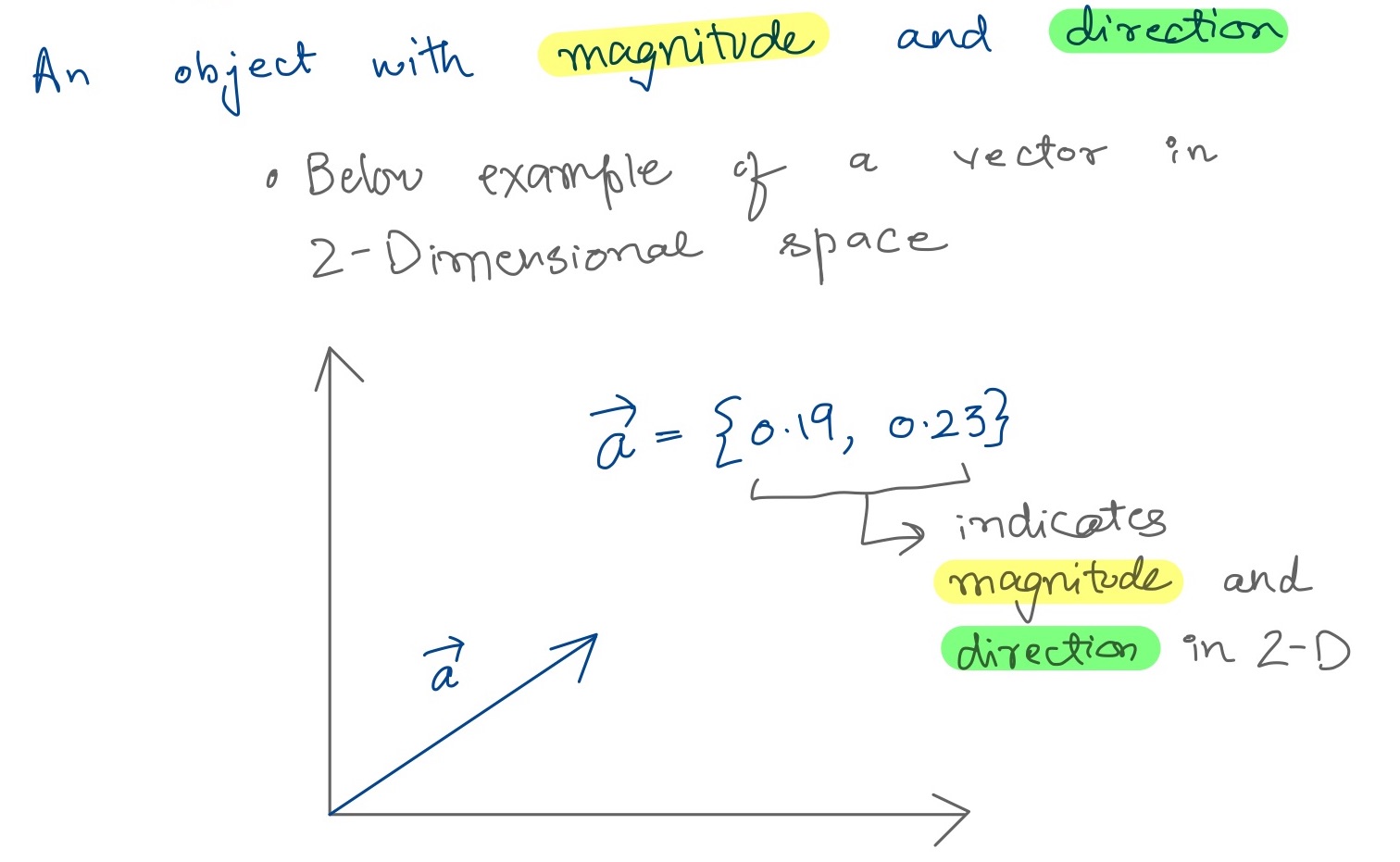

Vectors

Vector Embedding

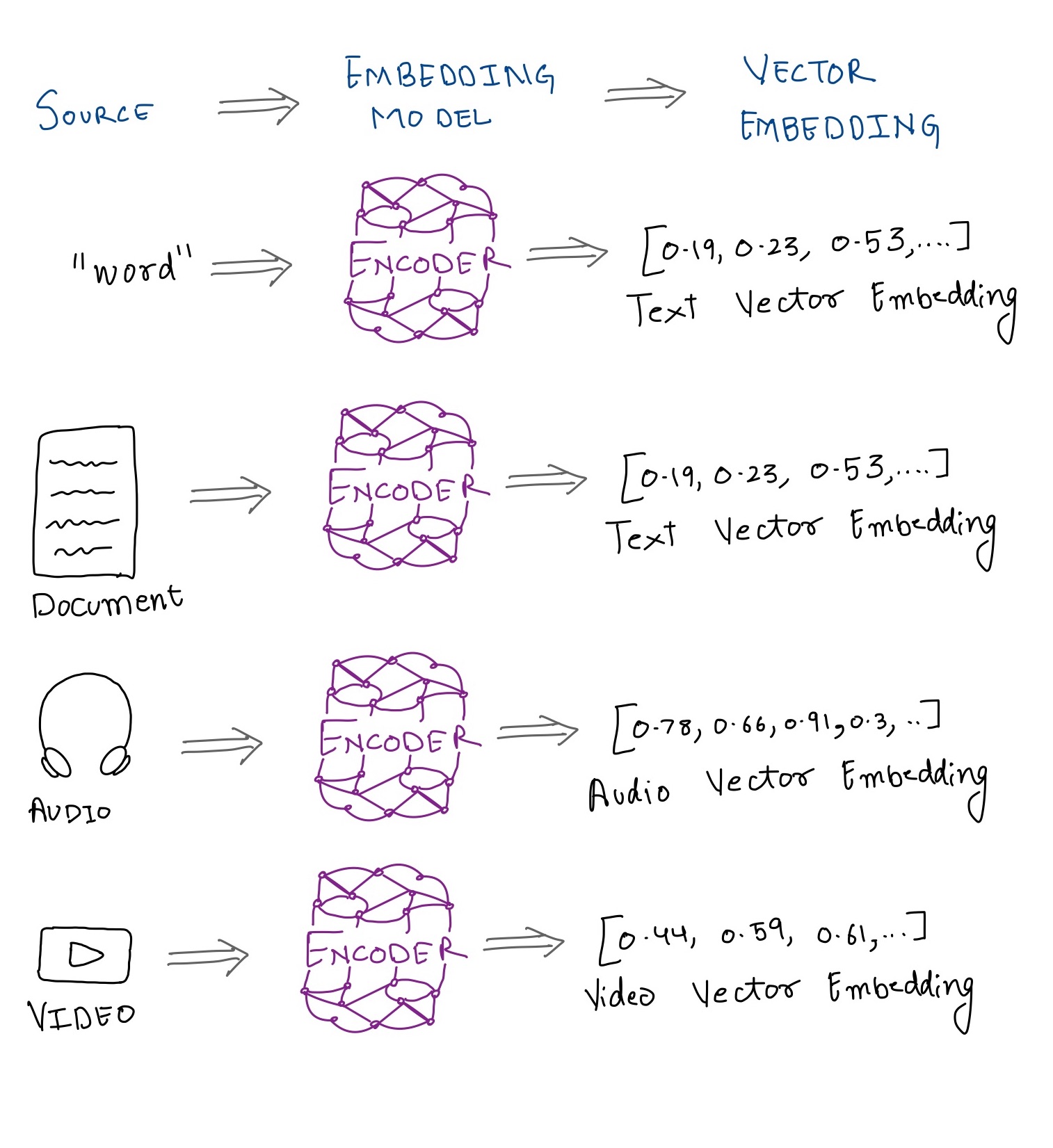

The conversion of data from one medium to a vector representation is known as generating vector embeddings.
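To make the idea concrete, here is a deliberately tiny sketch of turning text and an image into vectors. Everything in it is an assumption made for illustration: the `VOCAB` list, the `embed_text` and `embed_image` helpers, and the 2x2 "image" are hypothetical, and real multimodal models learn their embeddings with neural networks rather than counting words or flattening pixels.

```python
import numpy as np

# Hypothetical toy vocabulary, chosen only for this illustration.
VOCAB = ["rocket", "launch", "space", "elephant", "car"]


def embed_text(text: str) -> np.ndarray:
    """Count how often each vocabulary word appears in the text."""
    tokens = text.lower().split()
    return np.array([tokens.count(word) for word in VOCAB], dtype=float)


def embed_image(pixels: np.ndarray) -> np.ndarray:
    """Flatten a grayscale image and scale its 0-255 values to 0-1."""
    return pixels.astype(float).ravel() / 255.0


text_vec = embed_text("Rocket launch into space")
image_vec = embed_image(np.array([[0, 255], [128, 64]], dtype=np.uint8))

print(text_vec)   # one number per vocabulary word
print(image_vec)  # one number per pixel
```

Note that these two toy vectors live in unrelated spaces and cannot be compared meaningfully. The point is only that both kinds of data end up as a list of numbers; a trained model would map them into one shared space.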

Making the connection

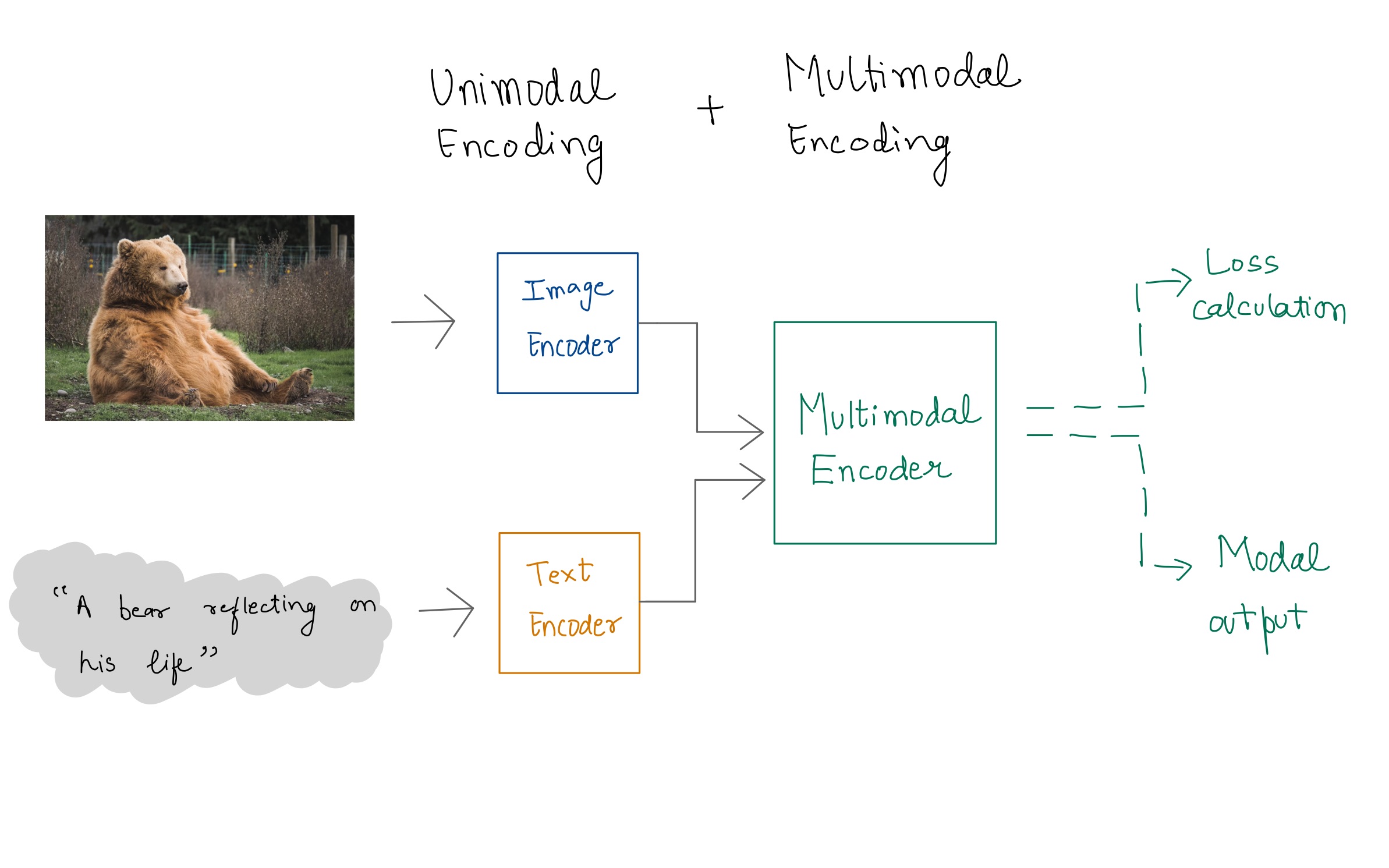

We can use this approach to create models that generate embeddings for multiple types of data, and then use a single model (like the multimodal encoder shown in the accompanying image) to establish a relationship between these embeddings. The distance between two vectors in a vector space helps us quantify the similarity or contrast between two objects. The closer the vector representations of two objects are, the more contextually related they are likely to be.

Note that the above image is just for demonstration. The underlying process for achieving this could be much more complex. For a more real-world example, you can check the research paper on Meta AI’s FLAVA model: FLAVA: A Foundational Language And Vision Alignment Model
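As a rough sketch of how closeness between embeddings can be measured, the snippet below uses cosine similarity, one common choice alongside Euclidean distance. The three vectors are made-up numbers standing in for embeddings that a trained model would produce, and the variable names are hypothetical.

```python
import numpy as np


def cosine_similarity(a: np.ndarray, b: np.ndarray) -> float:
    """Cosine of the angle between a and b; values near 1.0 mean similar direction."""
    return float(np.dot(a, b) / (np.linalg.norm(a) * np.linalg.norm(b)))


# Made-up 3-D embeddings, assumed to come from a shared vector space.
image_of_car = np.array([0.9, 0.1, 0.2])
text_about_car = np.array([0.8, 0.2, 0.1])
text_about_elephant = np.array([0.1, 0.9, 0.3])

sim_related = cosine_similarity(image_of_car, text_about_car)
sim_unrelated = cosine_similarity(image_of_car, text_about_elephant)

print(f"car image vs. car text:      {sim_related:.3f}")
print(f"car image vs. elephant text: {sim_unrelated:.3f}")
```

With these numbers, the car image scores much higher against the car text than against the elephant text, which is the behaviour we want from a shared embedding space.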

Contrastive Learning

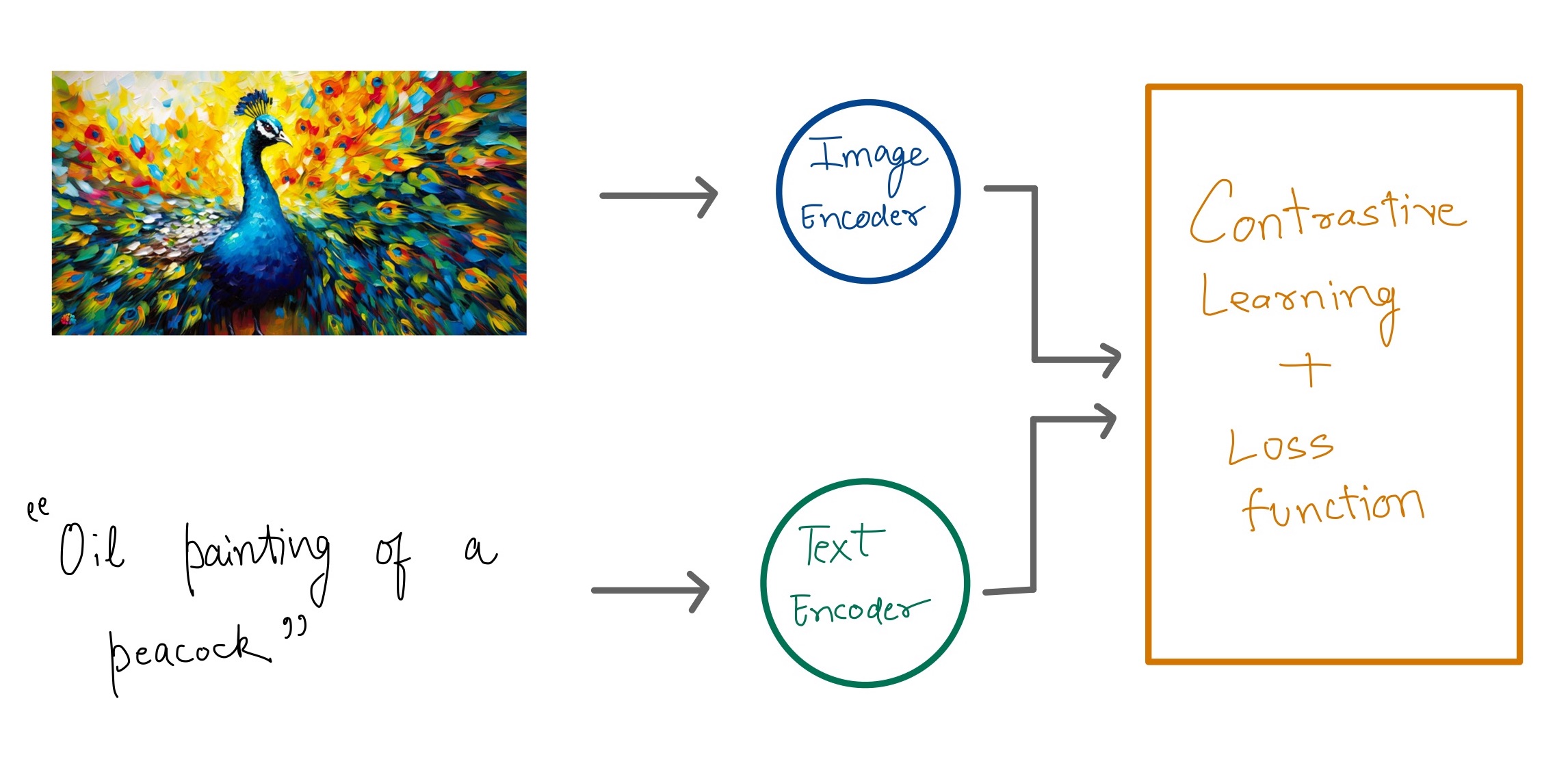

Contrastive Learning is a common approach used when training models to work with multiple modalities at once.

Simply put: “contrastive” implies contrast, i.e., we train the model with both positive and negative examples (generally, more negative examples than positive).

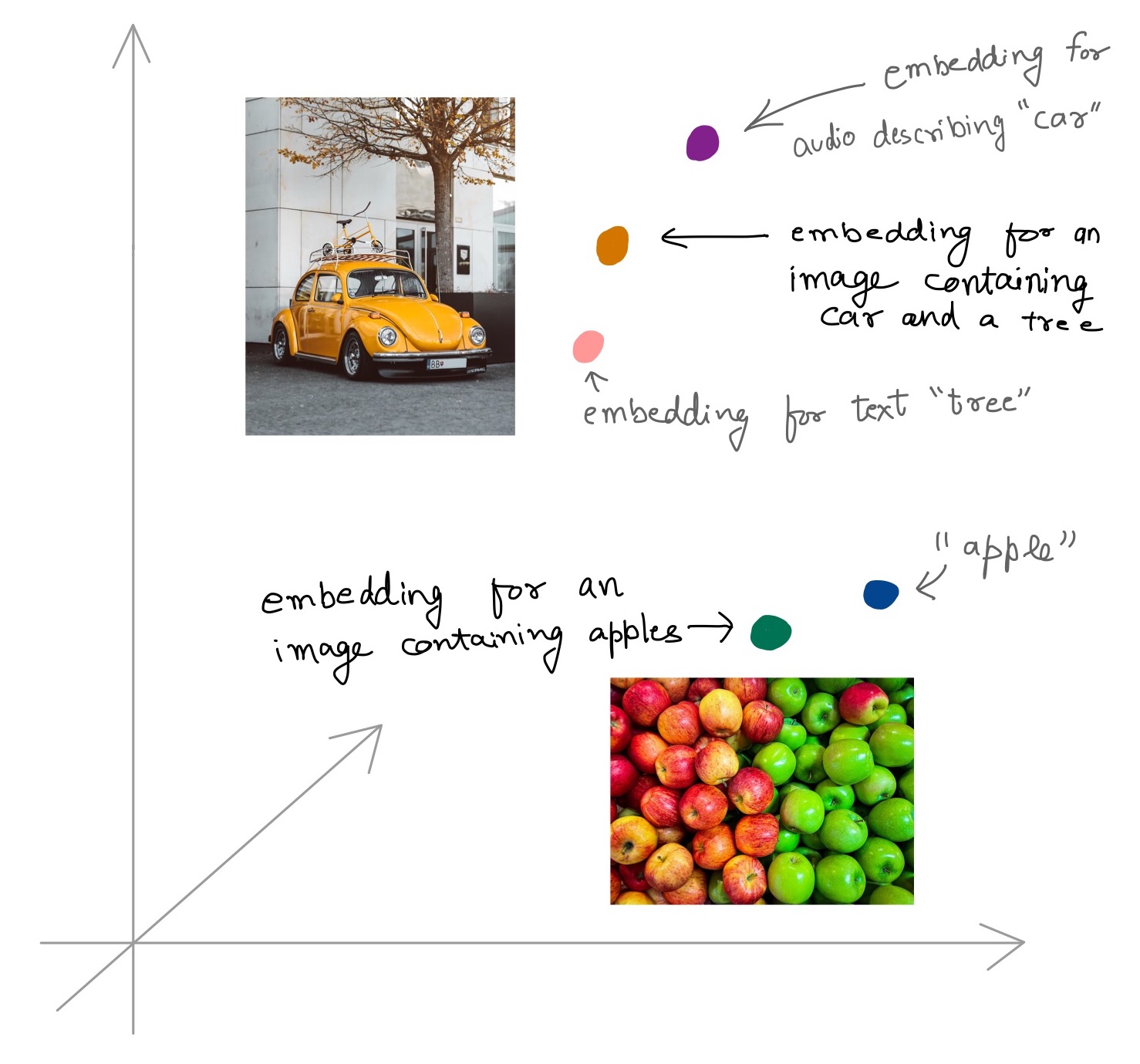

The main idea is that, given a vector space and the vector embeddings generated by the model, embeddings for data that are closely related (example: an image of an elephant and a text containing the word ‘elephant’) should be placed close together, and embeddings for data that are NOT closely related should be far away from each other.

The image below gives an idea of how such a 3-D vector space might look:

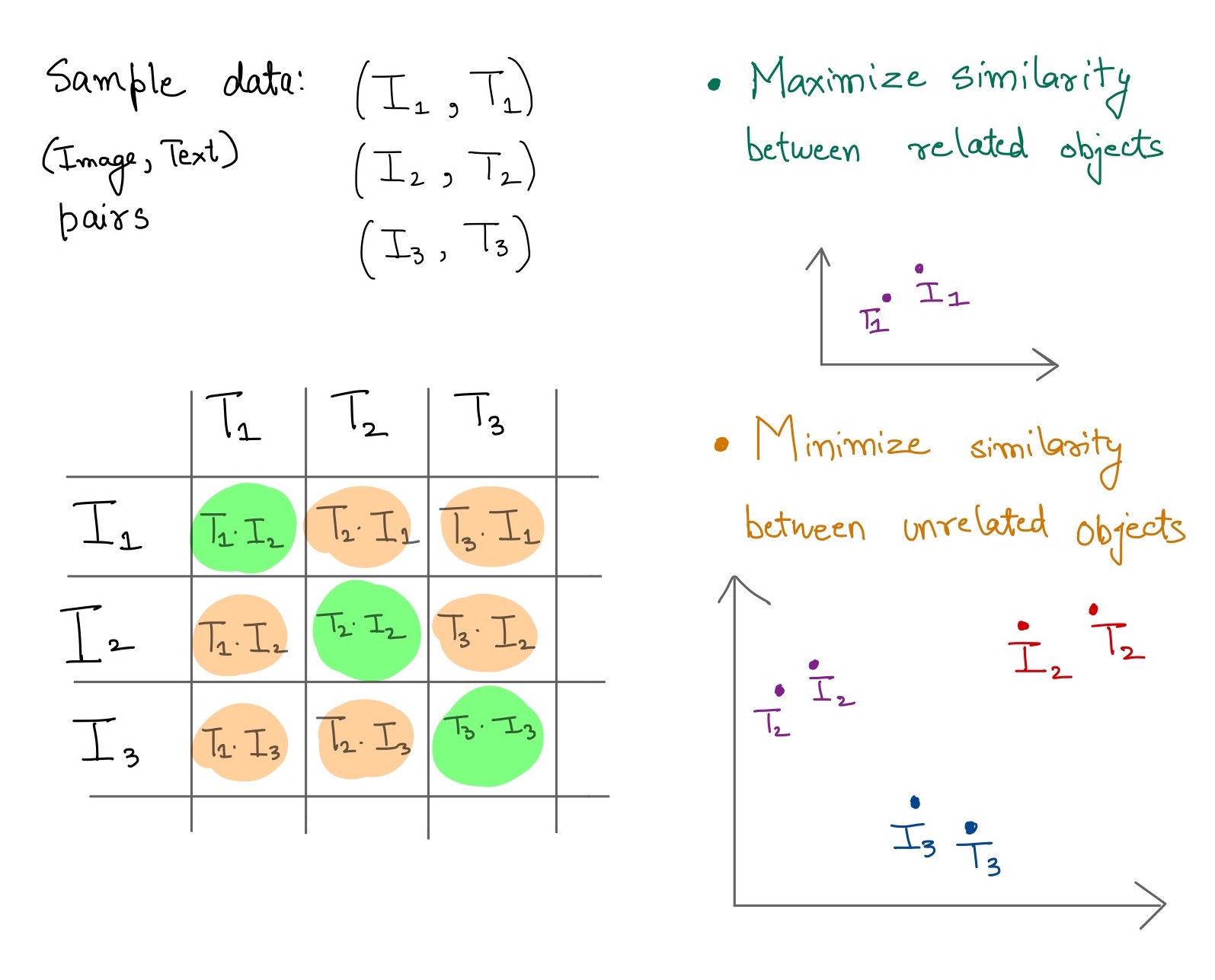

Take the example of an ML model that works with two modalities, such as text and image. To enable such contrastive learning, we need large amounts of data in the form of text-image pairs to train the model.

For example, let’s say \(I_1\) is an image containing a car, \(T_1\) is a text that mentions a car, and \(T_2\) is another text that doesn’t mention a car anywhere. Ideally, our model should learn over time to create vectors such that the distance between the vectors for \(I_1\) and \(T_1\) is much lower than the distance between the vectors for \(I_1\) and \(T_2\), letting us deduce (later on) which data objects may be more closely related.
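To give a feel for what "learning over time" optimizes, here is a minimal NumPy sketch of a symmetric contrastive loss over a batch of image-text pairs, in the spirit of CLIP-style training. It is an illustration under stated assumptions, not the actual training code of any model: the function names, the `temperature` value of 0.07, and the random stand-in embeddings are all chosen here for demonstration. Row `i` of each array is assumed to be a matching pair, and every other row in the batch acts as a negative example.

```python
import numpy as np


def l2_normalize(x: np.ndarray) -> np.ndarray:
    """Scale each row to unit length so dot products become cosine similarities."""
    return x / np.linalg.norm(x, axis=1, keepdims=True)


def log_softmax(logits: np.ndarray, axis: int) -> np.ndarray:
    """Numerically stable log-softmax along the given axis."""
    shifted = logits - logits.max(axis=axis, keepdims=True)
    return shifted - np.log(np.exp(shifted).sum(axis=axis, keepdims=True))


def contrastive_loss(image_emb, text_emb, temperature=0.07) -> float:
    """Symmetric cross-entropy over the image-text similarity matrix."""
    logits = l2_normalize(image_emb) @ l2_normalize(text_emb).T / temperature
    idx = np.arange(len(logits))  # matching pairs sit on the diagonal
    loss_image_to_text = -log_softmax(logits, axis=1)[idx, idx].mean()
    loss_text_to_image = -log_softmax(logits, axis=0)[idx, idx].mean()
    return float((loss_image_to_text + loss_text_to_image) / 2)


# Random stand-ins for model outputs: 4 pairs of 8-dimensional embeddings.
rng = np.random.default_rng(0)
text_emb = rng.normal(size=(4, 8))
aligned_images = text_emb + 0.01 * rng.normal(size=(4, 8))  # close to their texts
shuffled_images = aligned_images[[1, 2, 3, 0]]              # paired with wrong texts

loss_aligned = contrastive_loss(aligned_images, text_emb)
loss_shuffled = contrastive_loss(shuffled_images, text_emb)
print(loss_aligned, loss_shuffled)
```

The loss is low when each image embedding sits next to its own text and high when the pairs are mismatched, so minimizing it pulls related pairs such as \(I_1\) and \(T_1\) together while pushing unrelated ones such as \(I_1\) and \(T_2\) apart.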

The approach and image above were inspired by OpenAI’s CLIP model. To learn more about it, you can check out this wonderful article on Pinecone: Multi-modal ML with OpenAI’s CLIP

Conclusion

You can do far more with simple things than you might imagine. A very basic data structure like a vector can be used to build mammoth, robust multimodal machine learning models, empowering applications in domains unexplored until now.

I hope through this quick visual guide you were able to gain some basic familiarity with how things are working under the hood when using a multimodal machine learning tool. Happy exploring! 🫶🏼

Suggested further readings:

- Meta AI’s multimodal ML models and research work

- To learn more about contrastive learning, check: Contrastive Self-Supervised Learning